Sketch, refine, rinse and repeat

Building an AI Audio Guide - Part 3

Let’s get to it!

This is the final installment in my three-part series on prototyping an AI audio guide app. If you missed them, here’s where it started: Part 1 and Part 2.

Following my mantra of fast iteration, I decided to just build the most basic thing first.

This helps you cut through writer's block and get into writing the code faster.

That’s usually what we devs want to do, but it can lead to poor decisions that harm your project from the start.

Because of that, I prefer a middle-ground solution that addresses both problems.

I call this the coding equivalent of Sketch and Refine.

In art and design, ‘Sketch and Refine’ is a method where an initial sketch is refined over time to create a final piece, improving precision and depth in the design process.

This is an example of that in practice. Source: Digital Art Timelapse.

| Stage | Description |
|---|---|
| 1 | Initial outline for a semi-realistic digital painting. |
| 2 | Coloring and detailed features added. |
| 3 | Well-defined portrait with attention to facial nuances. |
| 4 | Clothing, textures and hair refinement added. |
| 5 | Final stage: last details, textured background and sunlight highlighting. |

The idea is to iterate through multiple versions of the product rather than trying to get it right on the first try. This approach enables collecting real-world feedback, making adjustments, validating assumptions, and gradually improving the product.

A similar concept, often credited to Kent Beck, has long been associated with the Unix Way.

Make It Work. Make It Right. Make It Fast.

So, that’s the plan: to build a very basic prototype and keep iterating on it.

Basic Prototype

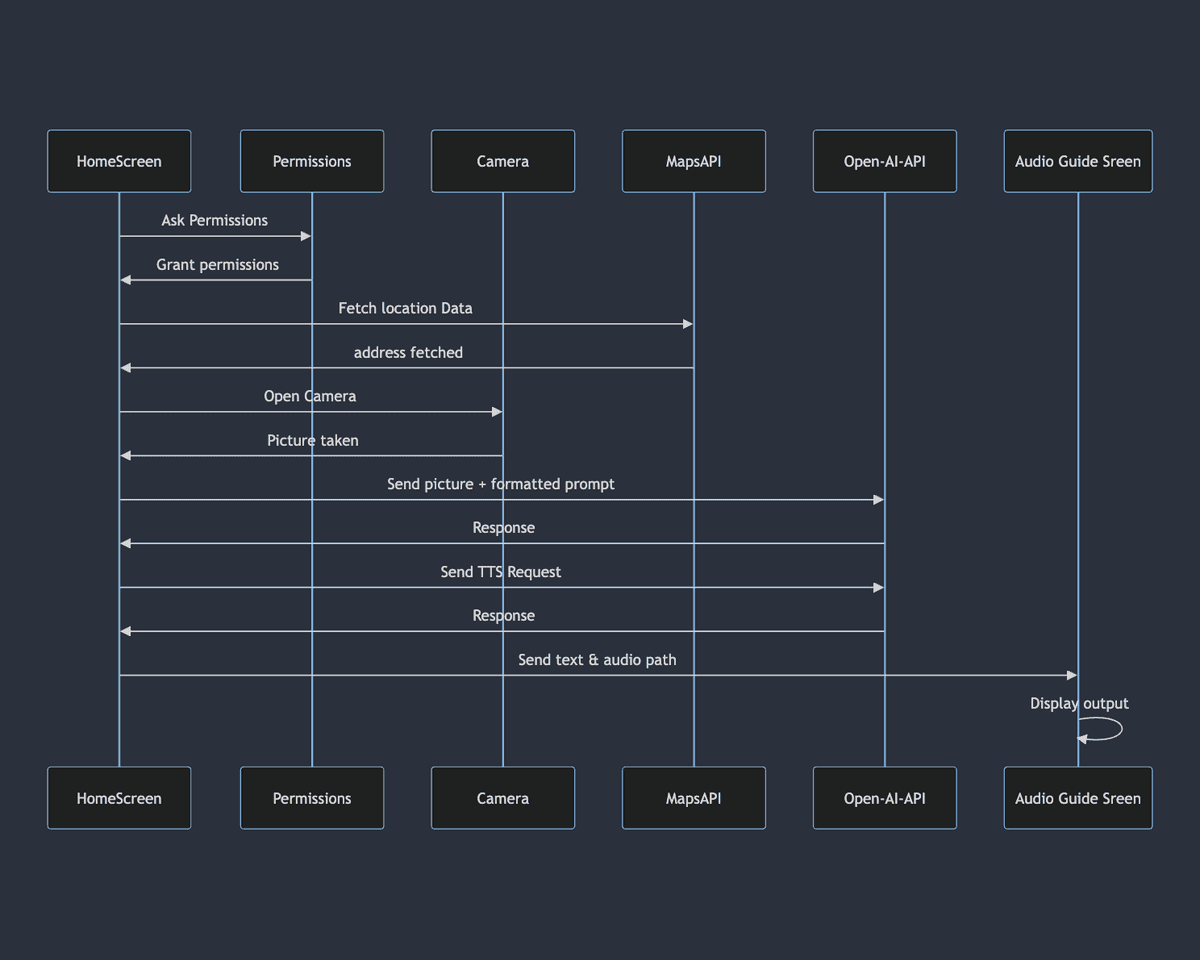

Having the “Sketch & Refine” idea in mind, I decided to plan the basic app flow with three simple screens: Home Screen, Preview Screen, and Audio Guide Screen. This is what it looked like:

App Flow Sequence Diagram

Next, I went for the simplest possible implementation.

A single screen handled asking for all permissions right at launch. Once those were granted, I’d grab the user’s last known location using Android’s play-services-location library and feed those coordinates into Google’s reverse geocoding REST API to translate them into an address. At the same time, I used Google’s NearbyPlaces REST API to get the landmarks close to those coordinates.
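To make the location step a bit more concrete, here’s a minimal Kotlin sketch of how the two request URLs could be built. It assumes the legacy Geocoding and Places Nearby Search JSON endpoints, a `tourist_attraction` type filter and a 500 m radius; `LatLng`, `reverseGeocodeUrl` and `nearbySearchUrl` are hypothetical names, not code from the app. On Android, the coordinates themselves would come from play-services-location (for example `FusedLocationProviderClient.lastLocation`).

```kotlin
import java.net.URLEncoder

// Hypothetical value type for a coordinate pair.
data class LatLng(val lat: Double, val lng: Double)

private fun encode(value: String): String = URLEncoder.encode(value, "UTF-8")

// Reverse geocoding: coordinates in, human-readable address(es) out.
fun reverseGeocodeUrl(pos: LatLng, apiKey: String): String =
    "https://maps.googleapis.com/maps/api/geocode/json" +
        "?latlng=${pos.lat},${pos.lng}&key=${encode(apiKey)}"

// Nearby Search (legacy Places API): places within radiusMeters of the coordinates.
// The type filter is an assumption; the original app may have queried differently.
fun nearbySearchUrl(pos: LatLng, radiusMeters: Int, apiKey: String): String =
    "https://maps.googleapis.com/maps/api/place/nearbysearch/json" +
        "?location=${pos.lat},${pos.lng}&radius=$radiusMeters" +
        "&type=tourist_attraction&key=${encode(apiKey)}"
```

Both endpoints return JSON, which a thin HTTP client wrapper would then parse into an address and a list of nearby landmark names for the prompt.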

Tapping a button would fire a camera intent. From this, I got a low-resolution snapshot, converted it into a base64 string, and sent all that data to the LLM. Not pretty, but it got the job done!
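The base64 step is tiny but easy to get wrong. Here’s a minimal sketch, assuming the snapshot has already been compressed to JPEG bytes (on Android, for example with `Bitmap.compress`) and that the LLM accepts inline images as `data:` URLs, as OpenAI’s vision-capable chat models do. `toJpegDataUrl` is a hypothetical helper name.

```kotlin
import java.util.Base64

// Wraps raw JPEG bytes in a data URL so the image can travel inline in a JSON request body.
// java.util.Base64 is available on Android from API 26; android.util.Base64 is the older option.
fun toJpegDataUrl(jpegBytes: ByteArray): String =
    "data:image/jpeg;base64," + Base64.getEncoder().encodeToString(jpegBytes)
```

Keeping the snapshot low resolution pays off here: base64 inflates the payload by roughly a third, so bigger images mean noticeably slower uploads.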

This was implemented in the quickest and dirtiest way possible. Just make it work. Even the commits were done in a quick and dirty style.

$ git logline

* 35f817e - Implement bot (11/12/2023) <Iury Souza>

* b474f43 - Add support for taking pictures (11/12/2023) <Iury Souza>

* a421a34 - Implement location data fetching (10/12/2023) <Iury Souza>

Refining

Once you’ve got that initial prototype working, refactoring the code and seeing the big picture becomes much easier.

Those shortcuts you took suddenly scream at you, and you get the space to make important architectural decisions.

Delaying decisions

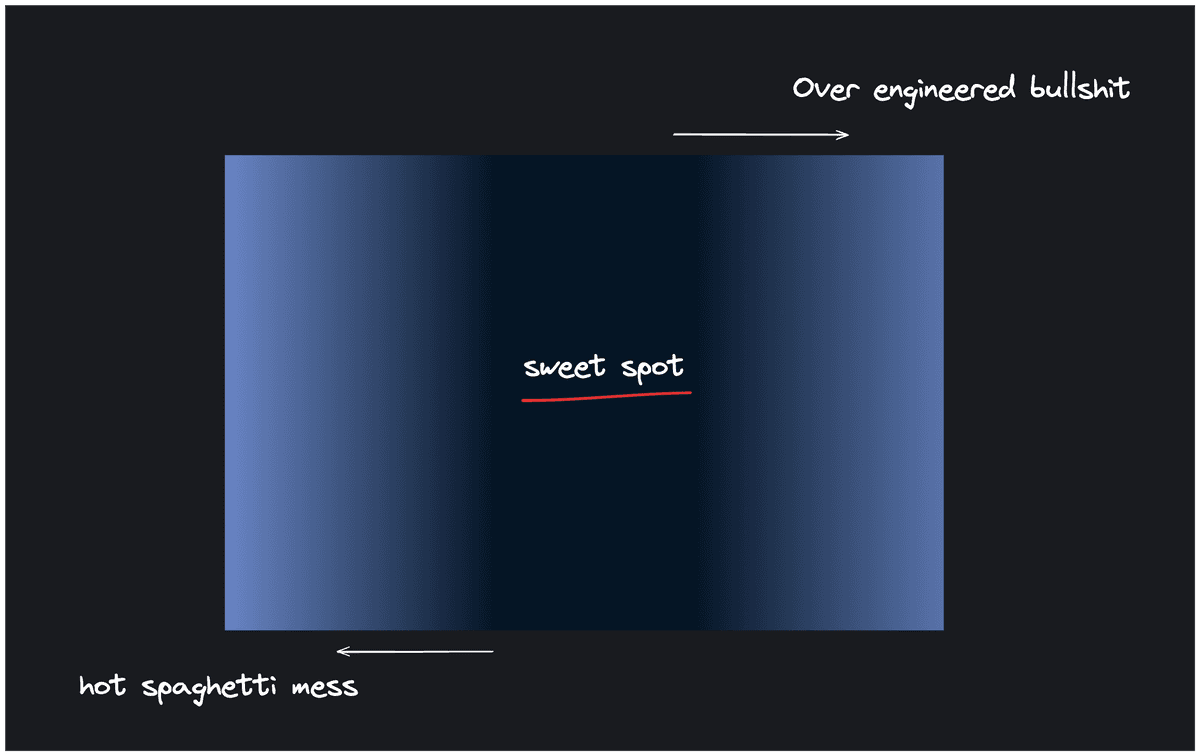

So now, on one hand, you have the opportunity to apply all the fancy architectural patterns you’ve learned, the latest modularization approaches, and even decide which esoteric libraries you’d like to have in the project. On the other hand, you could go full Wild West: a few messy files, a bunch of singletons… why not? Really, though, finding the balance is key. There’s this spectrum I always think of:

These choices will shape the entire coding experience from now on, so it’s important to get them right.

The sweet spot depends on your style and the trade-offs you’re willing to make. My rule of thumb is: Do not rush these decisions.

The YAGNI (“You Aren’t Gonna Need It”) principle is your friend: decide when you have to, when you fully understand the problem and have viable solutions in mind. Similarly, let your project’s requirements mature before making decisions that are hard to reverse; that way, you can be sure you’re optimizing for the right goals. And finally, don’t create abstractions just for the sake of it!

Cutting the bullshit

Still under the same idea, these are some of the things I’m not doing at this stage:

- Single module. I don’t need a multi-module architecture. There’s absolutely no benefit in bringing a multi-module setup to the table so early on.

- No custom Gradle setup. Getting fancy with Gradle build config is also something people waste way too much time on. KISS: Keep it simple, stupid.

- No code gen. I’m avoiding code gen libs; KAPT in particular is out of the question.

- DI? I went cowboy with a simple Service Locator. No frameworks.
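For reference, a simple Service Locator can be as little as a registry of lazily created instances. This is a hedged sketch, not the app’s code: `ServiceLocator`, `locate` and `FakeLocationProvider` are hypothetical, and the `LocationProvider` interface only borrows the name of an abstraction mentioned later in the post; its `lastKnown` method is an assumption.

```kotlin
import kotlin.reflect.KClass

// Hypothetical abstraction; the real LocationProvider's API is not shown in the post.
interface LocationProvider {
    fun lastKnown(): Pair<Double, Double>?
}

class FakeLocationProvider : LocationProvider {
    override fun lastKnown() = 52.5546 to 13.3978
}

// A registry of lazily created singletons, keyed by type. No annotations, no code generation.
object ServiceLocator {
    private val services = mutableMapOf<KClass<*>, Lazy<Any>>()

    fun <T : Any> register(type: KClass<T>, factory: () -> T) {
        services[type] = lazy(factory)
    }

    @Suppress("UNCHECKED_CAST")
    fun <T : Any> get(type: KClass<T>): T =
        (services[type] ?: error("No service registered for ${type.simpleName}")).value as T
}

// Convenience accessor: locate<LocationProvider>() instead of ServiceLocator.get(LocationProvider::class).
inline fun <reified T : Any> locate(): T = ServiceLocator.get(T::class)
```

Swapping in a real implementation, or a fake for tests, is a one-line change at the registration site, and moving to a DI framework later stays possible because call sites only depend on the interfaces.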

Obviously, if I have to change any of these decisions I can, but I don’t need to commit to any of them yet.

This ain’t no Wild West either

I’m not gonna go full cowboy either. I’m still following basic coding principles and standards. Separating concerns, writing tests (where it’s useful at this point), and keeping the code clean (such a loaded term) and readable, of course. I’m even using a simple MVI architecture to keep things organized and consistent.

The MVI thingy looks a bit like this: consumers can send events and listen to State and Effect changes. It gets the job done without being overcomplicated. It also forces the implementations to be consistent, which is actually what I’m looking for here.

I generally shouldn’t have to spend a lot of brain cycles whenever I need to implement something simple. There should be a standard way of doing common things in this part of the app.
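The post only shows the subclass side, so here is one possible shape for the base class, as a hedged sketch. Only `MVIViewModel`, `setInitialState` and `handleEvent` come from the post; `sendEvent`, `observeState`, `observeEffects`, `setState`, `setEffect` and the counter example are assumptions. An Android version would most likely extend `ViewModel` and expose `StateFlow`/`Flow`; plain callbacks keep this sketch dependency-free.

```kotlin
// Hypothetical base class: only the name and the two overridden hooks come from the post.
abstract class MVIViewModel<Event, State : Any, Effect> {
    private var current: State? = null
    private val stateObservers = mutableListOf<(State) -> Unit>()
    private val effectObservers = mutableListOf<(Effect) -> Unit>()

    // Current state, created on first access from the subclass's initial state.
    val state: State
        get() = current ?: setInitialState().also { current = it }

    abstract fun setInitialState(): State
    abstract fun handleEvent(event: Event)

    // The single entry point for consumers: everything the UI does becomes an Event.
    fun sendEvent(event: Event) = handleEvent(event)

    // New observers immediately receive the current state.
    fun observeState(observer: (State) -> Unit) {
        stateObservers += observer
        observer(state)
    }

    // Effects are one-off signals (navigation, toasts) and are not replayed.
    fun observeEffects(observer: (Effect) -> Unit) {
        effectObservers += observer
    }

    protected fun setState(reducer: State.() -> State) {
        val next = state.reducer()
        current = next
        stateObservers.forEach { it(next) }
    }

    protected fun setEffect(effect: Effect) {
        effectObservers.forEach { it(effect) }
    }
}

// Hypothetical example feature, showing the shape of a concrete view model.
sealed interface CounterEvent {
    object Increment : CounterEvent
}

data class CounterState(val count: Int)

sealed interface CounterEffect {
    data class ShowMessage(val text: String) : CounterEffect
}

class CounterViewModel : MVIViewModel<CounterEvent, CounterState, CounterEffect>() {
    override fun setInitialState() = CounterState(count = 0)

    override fun handleEvent(event: CounterEvent) {
        when (event) {
            CounterEvent.Increment -> {
                setState { copy(count = count + 1) }
                if (state.count == 3) setEffect(CounterEffect.ShowMessage("Three!"))
            }
        }
    }
}
```

The point is the consistency: every feature answers the same two questions (what is the initial state, how does each event change it) and nothing else.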

```kotlin
class UserContextViewModel(...) : MVIViewModel<
    UserContextViewEvent,
    UserContextViewState,
    UserContextViewEffect
>() {
    override fun setInitialState() = {...}

    override fun handleEvent(event: UserContextViewEvent) {...}
}
```

Besides that, I didn’t add the standard clean architecture components like repositories or use cases. I settled for simpler abstractions like LocationProvider or GoogleMapsApi, a simple HTTP client wrapper that exposed Google’s REST APIs.

I didn’t want to add a lot of layers here because I just didn’t know exactly what I was building. So making things easy to refactor was important.

To me, this series of decisions and compromises is what makes the project enjoyable to work with. It’s a side project; you don’t need to make your life harder than it needs to be. It’s all about finding the right balance between speed and quality.

Tidying things up

At this point we’re still only dealing with the happy path, but now we add:

- A permissions pager with explanations, etc.

- A Gradle versions catalog (only Gradle change, promise)

- A Navigation library

I also refactored the code to use the service locator and that basic MVI architecture, and finally defined the theme and colors properly.

It still looked ugly, but internally it was much better.

Dealing with LLM Prompts

We’re all used to dealing with HTTP-based APIs, especially RESTful APIs or any kind of RPC. This is the long-standing norm in most software.

These days we have more sophisticated protocols like gRPC or GraphQL. While they introduce new features and improvements, they fundamentally follow the same paradigm: remotely accessing data stored elsewhere.

LLMs, on the other hand, are a different monster. As a client application, we can now effectively tell this black box what we want it to do via a prompt, and it will happily answer us. But not only that: via some prompt engineering techniques, you can alter the way it answers.

For example, you can change the format of the answer. Maybe you want the answer to contain some metadata about the text, so you can ask it to answer in Markdown format. Or maybe you want it to answer in a particular JSON structure. You can even ask it about things it doesn’t know, and it will happily hallucinate an answer for you.

This is a brave new world for anyone, like me, who has never worked with this kind of tech before.

Down the Prompt Engineering rabbit hole ⤵️

Well, like I said in Part 1, one could say that I was an early adopter of this tech, at least as a regular user. So I knew the basic prompting style and was able to come up with this prompt.

My initial prompt:

```
You are a tour guide. You have a laid back personality and like to
enthusiastically talk about monuments and history. You're good at
providing facts and bits of trivia of the places people ask you about.
You can also understand city addresses and can pinpoint any location
of the city of `input.city` just with its address.

A tourist just sent you a picture and this address: `input.address`
of a place in `input.city`. The place is near these
locations: `input.getLocations()`

The tourist asks you "what's in the picture"?

Please answer this person in the style of a tourist guide
who's enthusiastic about history.

- Immediately start talking about the monument or historic place.
- Don't start the text with: "Ah, what a splendid
  sight to behold!" or similar phrases.
- Do not repeat the address or the city name in your answer.
- It's important that you answer in a way that's
  informative and interesting.
- Try to figure out which particular part of the monument
  the picture is focusing on and talk about that.
```

Nothing outstanding there: I just provided it with the context and gave it some instructions on how to talk. For a particular address I provided, it would return this kind of output.

Output:

```
Right before your eyes, you're looking at a powerful photographic
display located near the historic Bornholmer Strasse in Berlin,
which is part of the larger Place of 9 November installation.

This particular image captures an extraordinary moment in history,
an intimate glimpse into the jubilant faces of East Berliners
at the Bornholmer Strasse border crossing on the
night of November 9, 1989 – a night that altered the course of history.
This was the first crossing to open during the fall of the Berlin Wall, marking
a pivotal point in the reunification of Germany. People streamed through here,
in disbelief and elation, as the barriers that once divided a city, and
symbolically the world, were lifted.

In this surrounding area, the picturesque Kirschblutenpfad, or Cherry
Blossom Path, awaits you during the spring, standing in contrast
to the Bosebrucke, another witness to the changes that swept
across this city.

This installation invites contemplation and serves as a portal to the past,
allowing visitors to stand amidst history retold through imagery. As you wander
alongside this precious slice of the city's timeline, you can almost hear
the echoes of cheers and the rustle of footsteps that marked the beginning
of a new era. it's not just a photograph, it is a testimony to unity, to change,
and to the enduring spirit of berliners.
```

Decent response, but I thought it could do better.

Time to read the manual.

Prompt Engineering Techniques

At this point I started to look into the different techniques to improve the quality of the responses and I found a few of them that I thought were interesting.

Role prompting

This is the one where you give the AI a role and ask it to play it, which usually increases the quality of responses quite a bit. This is a basic one, and I realized I was already doing it.

You are a tour guide.

The role you assign serves as a guideline for the kind of response you’re looking for. It’s good for bringing out specific styles, tones, or levels of complexity in responses.

System Roles

Instead of sending the role prompt via the standard user prompt field, you can set it as a system prompt, which is said to apply to every question.

It can contain instructions guiding the LLM’s behavior, like:

- Assistant description: profession, persona, etc.

- Context: information, preferences, etc.

- Response format: email, summary, json

- Boundaries: don’t talk about this, don’t repeat that, etc.
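In an OpenAI-style chat request, the system prompt is simply the first entry in the `messages` array, with role `system`, and it is sent along with every request. Here’s a minimal, dependency-free sketch; `ChatMessage`, `buildMessages`, `toJson` and the hand-rolled escaping are illustrative assumptions, and a real app would use a proper JSON library.

```kotlin
// One entry in the chat "messages" array.
data class ChatMessage(val role: String, val content: String)

// The system message carries persona, context, format and boundaries;
// the user message carries the actual question.
fun buildMessages(systemPrompt: String, userPrompt: String): List<ChatMessage> = listOf(
    ChatMessage(role = "system", content = systemPrompt),
    ChatMessage(role = "user", content = userPrompt),
)

// Minimal JSON string escaping: quotes, backslashes, newlines, carriage returns and tabs.
// Other control characters are not handled.
private fun String.jsonEscaped(): String = buildString {
    for (c in this@jsonEscaped) {
        when (c) {
            '"' -> append("\\\"")
            '\\' -> append("\\\\")
            '\n' -> append("\\n")
            '\r' -> append("\\r")
            '\t' -> append("\\t")
            else -> append(c)
        }
    }
}

fun List<ChatMessage>.toJson(): String = joinToString(prefix = "[", postfix = "]", separator = ",") {
    """{"role":"${it.role}","content":"${it.content.jsonEscaped()}"}"""
}
```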

Solo Performance Prompting (SPP)

This is a method where a single Large Language Model (LLM) acts like multiple experts (or personas) working together to solve complex tasks.

This is important because it improves the model’s ability to handle tasks that require deep knowledge and complex reasoning, making it more effective and versatile.

A basic example of its use could be an LLM playing the roles of a historian, a scientist, and a travel guide to answer a question about the historical and scientific significance of the Eiffel Tower.

The model, using SPP, would combine insights from these different personas to provide a comprehensive and well-rounded answer.
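A hedged sketch of how an SPP-style prompt could be assembled for the Eiffel Tower example. The wording is illustrative rather than the template from the original SPP paper, and `buildSppPrompt` is a hypothetical helper.

```kotlin
// Hypothetical SPP-style template; the wording is illustrative, not taken from the SPP paper.
fun buildSppPrompt(task: String, personas: List<String>): String {
    require(personas.isNotEmpty()) { "SPP needs at least one persona" }
    return buildString {
        appendLine("Simulate a collaboration between these experts: ${personas.joinToString()}.")
        appendLine("Each expert contributes insights from their own field in turn,")
        appendLine("points out gaps or mistakes in the others' input, and revises.")
        appendLine("When they agree, write one final, well-rounded answer.")
        appendLine()
        append("Task: $task")
    }
}
```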

Few shot prompting

This is a technique where you provide a few examples that demonstrate the kind of output you’re looking for before sending the actual query.

These few examples (or “shots”) give the AI a pattern to follow when dealing with the actual task.

Zero-shot chain-of-thought prompting

By appending words like “Let’s think step by step.” to the end of a question, LLMs are able to generate a chain of thought that answers the question. From this chain of thought, they are able to extract more accurate answers.

What worked for me

You see, this still feels more like invoking incantations than doing actual software development. Besides, measuring the quality of these changes is super subjective and hard to pin down. Most of the time you’re left going with your gut feeling.

But some simple tricks can make working with this way more manageable. For example, asking for a specific output format is super useful for bridging the unstructured nature of LLM outputs and the structured nature of our code base.

Role Prompting

This is the most basic one, and I think we’re all pretty much doing it at this point: tell the AI its job, its role.

```
You are a tour guide. You have a laid back personality and like to
enthusiastically talk about monuments and history. You've been
living in `input.city` for the past 30 years and know the city like
the back of your hand. Your main target audience are tourists aged from
18 to 45, so you have a casual and friendly speech tone.
```

Output formatting

This is the best example of managing this complexity.

```
Your output format is a json file without the code block
(`json content`)
{
  "title": "string",
  "content": "string"
}
The `title` should describe the monument
or historic place. Keep it short, 5 words max.
```

This was great. Now, with the title, I could actually create a screen instead of just rendering a block of text.

There was still a problem, though. Whenever I sent a picture of something that’s not a monument, it would start hallucinating (technical term for talking gibberish) and going off on tangents just to keep talking. So I added this to the prompt:

```
Your output format is a json file without the code block
(`json content`)
{
  "isValidRequest": "boolean",
  "title": "string",
  "content": "string"
}
The `isValidRequest` field should be `false` if the image is **NOT**
related to a monument or historic place and `true` otherwise.
When `isValidRequest` is `false`, the `title` and `content` should be omitted.
The `title` should describe the monument or historic place.
Keep it short, 5 words max.
```

Now I had error handling built into the output.
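On the app side, that flag maps naturally onto a sealed result type. A minimal sketch, assuming the JSON has already been decoded into a DTO by a JSON library (for example kotlinx.serialization); `GuideResponseDto`, `GuideResult` and `toResult` are hypothetical names. The `Malformed` case guards against the model answering `true` but leaving out a field, which nothing in the prompt strictly prevents.

```kotlin
// Mirrors the JSON shape requested in the prompt; title/content are omitted for invalid requests.
data class GuideResponseDto(
    val isValidRequest: Boolean,
    val title: String? = null,
    val content: String? = null,
)

sealed interface GuideResult {
    data class Guide(val title: String, val content: String) : GuideResult
    object NotALandmark : GuideResult
    data class Malformed(val reason: String) : GuideResult
}

// Turns the model's self-reported validity into an explicit, exhaustive result for the UI.
fun GuideResponseDto.toResult(): GuideResult {
    if (!isValidRequest) return GuideResult.NotALandmark
    val t = title?.takeIf { it.isNotBlank() } ?: return GuideResult.Malformed("missing title")
    val c = content?.takeIf { it.isNotBlank() } ?: return GuideResult.Malformed("missing content")
    return GuideResult.Guide(t, c)
}
```

A `when` over `GuideResult` then decides between the guide screen, a friendly “that doesn’t look like a landmark” message, or a retry.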

Few shot prompting

This idea worked pretty well. What I did was basically pick one of the outputs and change the parts I didn’t like: I replaced some words, changed the tone of the writing, etc. This caused the other answers to follow a general underlying pattern picked up from that example, which I feel is a great idea.

Sometimes it’s hard to explain exactly what you want, so an example fills in the gaps for you.
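In chat-style APIs, one common way to pass those shots is as earlier conversation turns: each example request followed by the hand-edited answer as an `assistant` message, then the real question. A hedged sketch; the post doesn’t say whether the edited example went into the prompt text or into separate messages, and `Shot` and `fewShotMessages` are hypothetical.

```kotlin
// One entry in an OpenAI-style chat "messages" array.
data class ChatMessage(val role: String, val content: String)

// Hypothetical: an example request paired with a hand-edited "ideal" answer.
data class Shot(val request: String, val idealAnswer: String)

// Builds: system prompt, then each shot as a user/assistant pair, then the real query.
fun fewShotMessages(system: String, shots: List<Shot>, query: String): List<ChatMessage> = buildList {
    add(ChatMessage("system", system))
    for (shot in shots) {
        add(ChatMessage("user", shot.request))
        add(ChatMessage("assistant", shot.idealAnswer))
    }
    add(ChatMessage("user", query))
}
```

Each shot costs tokens on every request, so one or two carefully edited examples are usually a better trade than many mediocre ones.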

Out of the prompt rabbit hole ⤴️

You can spend quite a while improving your prompts; there are many other techniques you can and should try. My final prompt had quite a few more things in it, but I feel there’s a point where adding new things stops being effective, so it’s a balancing act too. And like I said at the beginning, all this feels more like throwing a bunch of shots at a wall and seeing what sticks. So keep that in mind.

Back to refining - Making it pretty-er

Prototypes are nice and all, but a nice app is much better. But what kind of UI should we have here?

This is sort of an unusual app, so I couldn’t just take inspiration from another, bigger app.

As a user, I just wanted to go from camera to audio guide as fast as possible. The main problems were the long loading times and how to display the audio guide in an interesting way. This app’s UI should be minimal anyway, so it shouldn’t be rocket science, at least not for a side project.

I had some notes about what I wanted to build:

- Home: catchphrase: (CallToAction)

- Start camera

- Take picture

- Show fancy animation for uploading picture

- Request audio content and stylized img based on the picture uploaded

- Show blurred out stylized pic as background for text streaming.

- play audio on top of blurred background

So I had 3 main UI problems to solve.

- Home

- Loading

- Playback

Massive loading times

Long loading times are a real pain point: my app was taking an average of 35 seconds to generate text and audio! That’s a lot of waiting. ChatGPT’s text streaming improves the perception of waiting a lot, and implementing it was part of the plan, just not at this stage.

I wanted to get the basics down first, and since I would still have to wait for the audio to be generated anyway, I needed a decent loading screen.

So I went with a combination of Lottie animations and randomly selected sentences in the style of the Maxis loading screens from The Sims and SimCity series (try to spot them in the app demo video). This was a fun bit to add. It may start to feel repetitive after a while, but at this stage it got the job done (making waiting easier).

Random technical-sounding loading sentences
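The random loading sentences need just one small rule to feel less robotic: never show the same line twice in a row. A minimal sketch; `LoadingMessages` is hypothetical, and the sample lines in the test (apart from Maxis’ famous “Reticulating splines”) are made up. In Compose, `next()` could be called from a `LaunchedEffect` loop with a `delay` between lines.

```kotlin
import kotlin.random.Random

// Picks random loading lines, never the same one twice in a row.
class LoadingMessages(
    private val messages: List<String>,
    private val random: Random = Random.Default,
) {
    init {
        require(messages.distinct().size >= 2) { "Need at least two distinct messages" }
    }

    private var last: String? = null

    fun next(): String {
        var candidate: String
        do {
            candidate = messages[random.nextInt(messages.size)]
        } while (candidate == last)
        last = candidate
        return candidate
    }
}
```

Injecting the `Random` instance keeps the behavior deterministic in tests while staying random in the app.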

Karaoke Playback

The second thing I wanted to solve was the Playback screen. I decided to implement a feature similar to Spotify’s synced lyrics. I had the audio and the text being played, but I didn’t have the actual speech rhythm, and you can’t expect every word to follow the same timing; that would sound awfully like the old Windows TTS from 20 years ago. Today’s more natural-sounding voices vary their rhythm, which is exactly what makes syncing hard.

My solution was to take the current audio playback percentage and sync it to the scrollState of the component displaying the text.

This component has fading text borders, so the sentence currently being played is displayed in the center and the rest of the text fades out. This kinda creates the illusion of a karaoke-style component, even if it’s not perfect.

Good enough for me.
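The percentage-to-scroll mapping boils down to one proportional formula, offset = (position / duration) × maxScroll. A hedged sketch in plain Kotlin; `scrollOffsetFor` is hypothetical, and in Compose its result could be passed to `ScrollState.animateScrollTo`, using `ScrollState.maxValue` as `maxScroll`.

```kotlin
import kotlin.math.roundToInt

// Maps playback progress to a scroll offset: offset = (position / duration) * maxScroll.
fun scrollOffsetFor(positionMs: Long, durationMs: Long, maxScroll: Int): Int {
    // Nothing to sync before the audio duration or the layout is known.
    if (durationMs <= 0L || maxScroll <= 0) return 0
    val fraction = (positionMs.toDouble() / durationMs).coerceIn(0.0, 1.0)
    return (fraction * maxScroll).roundToInt()
}
```

Because the text scrolls at a constant rate while speech doesn’t, the centered sentence only approximates what’s being said, which is the “not perfect, but good enough” trade-off.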

The next piece of the puzzle was making the text easier for us to scan. For that, I just modified the prompt to have it format the text with Markdown, so I could render it in a more interesting way. I added this to the prompt:

```
The text is in the content field should use bold markdown formatting for the
following cases:
- Dates.
- Names of places.
- Addresses.
- Names of people.
- Historic events.
- Name of monuments.
- Names of historic figures.
```

To render it, I used the nice compose-rich-text library, which literally takes care of everything.

Dev Mode

Geolocation coordinates are crucial in this app. To fine-tune the output, you’ll typically provide a picture and a lat/lng. Real-world testing gets repetitive fast – you don’t want to take a new picture for every test.

That’s why I created a Dev Mode screen. Here, you can enter a picture URL and paste some coordinates, skipping the usual photo capture and location tracking. Later I even expanded this system to stub out the ChatGPT API to better test the karaoke flow.
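Pasting coordinates is friendlier if the field accepts the “lat, lng” text that map apps such as Google Maps let you copy. A minimal sketch of that parsing step; the input format and `parseCoordinates` are assumptions, not the app’s actual Dev Mode code.

```kotlin
data class Coordinates(val lat: Double, val lng: Double)

// Parses "lat, lng" text (e.g. "52.5546, 13.3978"); returns null for anything else.
fun parseCoordinates(input: String): Coordinates? {
    val parts = input.split(',').map { it.trim() }
    if (parts.size != 2) return null
    val lat = parts[0].toDoubleOrNull() ?: return null
    val lng = parts[1].toDoubleOrNull() ?: return null
    // Reject values outside the valid latitude/longitude ranges (this also rejects NaN).
    if (lat !in -90.0..90.0 || lng !in -180.0..180.0) return null
    return Coordinates(lat, lng)
}
```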

This setup was a lifesaver during UI development. With quick tweaks and no cumbersome photo-taking, I could iterate on the design much faster.

Ironing it out

Now I only wanted to make a couple of improvements, like finally adding text streaming and support for Google’s Gemini SDK.

Text streaming was pretty easy to support, since it was built into both SDKs and my implementation was flexible enough to handle it. So while the text was streaming, I displayed it like a loading page, and only showed the final karaoke audio guide once the TTS audio had loaded.

Finally, Gemini had been released just a week before this stage, so I wanted to check it out. Living in the EU, I knew it was blocked, but that didn’t stop me from trying. So I just added a dev-mode option to enable it, and it worked as expected: a bit underwhelming at this point, but I wanted the freedom to switch engines if I wanted to.
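Switching engines, and stubbing them out in Dev Mode, mostly comes down to hiding each SDK behind one small interface. A hedged sketch: `GuideEngine`, `EngineType`, `StubEngine`, `selectEngine` and `partialTexts` are hypothetical, and the real SDKs typically stream through Kotlin coroutines and `Flow` rather than the `Sequence` used here to stay dependency-free. `partialTexts` shows how a streaming UI can render the growing answer chunk by chunk.

```kotlin
// Hypothetical engine identifiers, e.g. selected from a dev-mode setting.
enum class EngineType { OPENAI, GEMINI, STUB }

// Hides each SDK behind one call that yields the answer in chunks, like a streaming API.
fun interface GuideEngine {
    fun stream(prompt: String): Sequence<String>
}

// Dev-mode stand-in: replays canned text in fixed-size chunks, no network involved.
class StubEngine(private val canned: String, private val chunkSize: Int = 8) : GuideEngine {
    override fun stream(prompt: String): Sequence<String> = canned.chunkedSequence(chunkSize)
}

// Falls back when the requested engine isn't available (e.g. not configured or region-blocked).
fun selectEngine(type: EngineType, engines: Map<EngineType, GuideEngine>, fallback: GuideEngine): GuideEngine =
    engines[type] ?: fallback

// Each element is the text received so far, which is what a streaming UI would render.
fun partialTexts(engine: GuideEngine, prompt: String): List<String> =
    engine.stream(prompt).runningFold("") { acc, chunk -> acc + chunk }.drop(1).toList()
```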

App Pitch

Here’s a quick pitch of the app. It’s not the final version, I posted this before it had text streaming support.

I've been toying with the chat-GPT vision API since last week and I've put together this prototype.

I'm still exploring what it's capable of, but so far I'm impressed.

Apart from improving the rubbish UX/UI, the next step would be to use word embeddings to improve its accuracy pic.twitter.com/cABPZ2mY5U

— iury souza (@IurySza) December 22, 2023

Final touches

This is the final version of the app I shared just before taking my yearly 20 days off to travel to Brazil.

I finished ironing out some things here like rendering partially loaded content while streaming text and improving the "karaoke" audio guide screen. I try to keep the highlighted text in sync with the audio and added Netflix inspired skipping button animations 😛.

Also Gemini (+) pic.twitter.com/yQLzF6FJBP

— iury souza (@IurySza) January 9, 2024

Next steps

Okay, I’m excited to get this app published! Before a full release, I’ll definitely take a closer look at the pricing model and try to publish it for beta testers only in the coming weeks.

This is far from a finished product and I know what you might be thinking:

It doesn’t get things right all the time. It hallucinates or it is just stealing content from other people!

And I hear you. I also think it’s not perfect. That’s why I’m not monetising it.

That said, there are a few things I could do to improve the quality of the answers by a LOT:

- RAG (Retrieval Augmented Generation): In short, the app queries a vector database for content related to the question and injects that content into the LLM’s context, which can greatly reduce hallucinations in a lot of cases. You can read more about that here.

- Google Gemini 1.5: This recent update massively increases the context window. More context means better answers and could help with many of the problems RAG addresses. I’m curious to see where this leads!
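To make the RAG idea concrete, here’s a hedged, in-memory sketch of its two halves: retrieval by cosine similarity, then injection of the retrieved passages into the prompt. The linear scan stands in for a real vector database, the embeddings are assumed to come from some embedding model (the test uses toy 2-D vectors), and `Doc`, `cosine`, `topK` and `augmentPrompt` are hypothetical names.

```kotlin
import kotlin.math.sqrt

// A stored passage plus its embedding vector (produced by some embedding model; assumed here).
class Doc(val text: String, val embedding: DoubleArray)

// Cosine similarity: 1.0 means same direction, 0.0 means orthogonal (unrelated).
fun cosine(a: DoubleArray, b: DoubleArray): Double {
    require(a.size == b.size) { "Vectors must have the same dimension" }
    var dot = 0.0
    var normA = 0.0
    var normB = 0.0
    for (i in a.indices) {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    return if (normA == 0.0 || normB == 0.0) 0.0 else dot / (sqrt(normA) * sqrt(normB))
}

// Retrieval: the k passages most similar to the query embedding (a linear scan standing in for a vector DB).
fun topK(query: DoubleArray, docs: List<Doc>, k: Int): List<Doc> =
    docs.sortedByDescending { cosine(query, it.embedding) }.take(k)

// Augmentation: retrieved passages are injected into the prompt as grounding context.
fun augmentPrompt(question: String, context: List<Doc>): String = buildString {
    appendLine("Answer using only the facts below. If they are not enough, say so.")
    context.forEach { appendLine("- ${it.text}") }
    appendLine()
    append("Question: $question")
}
```

The “if they are not enough, say so” line plays the same role as `isValidRequest` did earlier: it gives the model an explicit way out instead of inventing an answer.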

Curious about the App?

The app is still not ready for a full release, but I’d be happy to get some feedback from you. If you’re interested in trying it out, send me an email and I’ll include you in the beta.

Conclusion

This was such a fun project! I can’t remember the last time a new technology blew me away like this. When ChatGPT went mainstream in 2022, it felt like magic. Even now, I’m still amazed by some of the things it can do.

Of course, there are plenty of issues, and it raises serious questions about how we’ll adapt as a society, but part of me can’t help but feel excited about the prospects of integrating this into our apps and tools.

Something I felt about this project from the start was that this would get built by someone. I’m sure that in a few months, a big company will pick this up. Even Zuck has talked about wanting Meta’s Ray-Ban glasses to be able to answer questions about a building’s history, so I’m pretty sure that will cover this audio guide use case too. Anyway, it’s nice to play around with the idea while there’s still nothing out there.

I learned a ton about LLMs and how to turn them into real products. Plus, it was fun to build some weird UI components!